When it comes to Federated Learning frameworks, we typically find two leading open source projects: Apache Wayang [2] (maintained by databloom) and Flower [3] (maintained by Adap). At first glance, both frameworks seem to do the same thing. But, as usual, a second look tells another story.

How does Flower differ from Wayang?

Flower is a federated learning framework written in Python that supports a large number of training and AI frameworks. The beauty of Flower is its strategy concept [4]: the data scientist defines how federated training is orchestrated, for example which clients take part in a round and how their results are aggregated, while each client trains with its preferred ML framework. Flower delivers the model to the clients, watches the execution, collects the results and starts the next round. That makes Federated Learning in Python easy, but at the same time limits its use to platforms that Python supports.
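The round-based loop that a strategy drives is easiest to see without any framework at all. The following is a minimal sketch, assuming a one-parameter linear model and toy client data, of FedAvg-style training: clients train locally, the server averages their weights (weighted by the number of local examples) and starts the next round. `local_train` and `fed_avg` are hypothetical names for illustration, not Flower's API; Flower's built-in `FedAvg` strategy performs the same kind of weighted aggregation over real model parameters.

```python
# Framework-free sketch of the loop a FedAvg-style strategy orchestrates.
# NOT Flower's API: local_train and fed_avg are hypothetical helpers.

def local_train(w, data, lr=0.1, epochs=5):
    """Client side: a few epochs of gradient descent on y = w * x (squared loss)."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w, len(data)  # updated weight and number of local examples


def fed_avg(results):
    """Server side: average client weights, weighted by local example counts."""
    total = sum(n for _, n in results)
    return sum(w * n for w, n in results) / total


# Each client keeps its data locally; only the weights travel to the server.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],              # client A, underlying relation y = 2x
    [(1.0, 2.0), (3.0, 6.0), (4.0, 8.0)],  # client B, same relation
]

w = 0.0
for rnd in range(3):  # three rounds, like num_rounds=3
    results = [local_train(w, data) for data in clients]  # local training on every client
    w = fed_avg(results)  # aggregate, then start the next round
    print(f"round {rnd + 1}: w = {w:.4f}")
```

After three rounds the shared weight is close to the true value 2, although no client ever sent its raw data to the server.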

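Flower has, as far as I could see, no data query optimizer. Wayang does: its cross-platform optimizer analyzes the processing plan, splits it into smaller pieces and assigns each piece to the platform where it is expected to run most efficiently, so that multiple frameworks can be used at the same time.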
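What such an optimizer does can be sketched in a few lines. The example below is a deliberately simplified, hypothetical model of cost-based platform selection: a linear chain of operators, made-up per-platform cost estimates and a flat cost for moving data between platforms, solved with dynamic programming. It is not Wayang's code or API; Wayang's optimizer works on whole plan graphs with its own cost model.

```python
# Toy cost-based platform assignment for a linear chain of operators.
# Operator names, platforms and all cost numbers are hypothetical.

OPERATORS = ["read_transactions", "filter", "join", "map_to_vector"]
PLATFORMS = ["java_streams", "spark", "flink"]

# Estimated cost of running each operator on each platform (made-up units).
COST = {
    "read_transactions": {"java_streams": 9, "spark": 4, "flink": 5},
    "filter":            {"java_streams": 3, "spark": 2, "flink": 2},
    "join":              {"java_streams": 12, "spark": 5, "flink": 6},
    "map_to_vector":     {"java_streams": 1, "spark": 4, "flink": 4},
}
MOVE_COST = 2  # cost of moving intermediate data from one platform to another


def cheapest_plan(ops, platforms, cost, move_cost):
    """Dynamic programming: best[p] = (cheapest cost, path) for the prefix ending on p."""
    best = {p: (cost[ops[0]][p], [p]) for p in platforms}
    for op in ops[1:]:
        new_best = {}
        for p in platforms:
            # Either stay on the previous platform or pay move_cost to switch to p.
            prev_cost, prev_path = min(
                (c + (0 if path[-1] == p else move_cost), path)
                for c, path in best.values()
            )
            new_best[p] = (prev_cost + cost[op][p], prev_path + [p])
        best = new_best
    return min(best.values())


total, assignment = cheapest_plan(OPERATORS, PLATFORMS, COST, MOVE_COST)
for op, platform in zip(OPERATORS, assignment):
    print(f"{op:18} -> {platform}")
print("estimated total cost:", total)
```

With these numbers the cheapest plan runs the first three operators on Spark and the lightweight final mapping on plain Java streams, for an estimated total cost of 14: switching platforms pays off once the saving exceeds the data movement cost.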

Combine Wayang and Flower and build a Federated GenAI and LLM Platform

How do you build a chatbot system that serves multiple functions and customers across the world, as a bank needs to? A chatbot stack typically combines NLP with multiple data sources to provide natural communication between humans and machines. The demand for human-machine interaction and natural, human-like communication has increased considerably, and Gartner's forecasts are a testament to it.

"Natural language processing is a subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data" (Wikipedia).

The typical infrastructure we have to take into account resembles an overgrown forest: multiple data sources, ranging from data warehouses (DWH) and RDBMS systems to rather closed sources such as financial transaction stores, customer bank data and credit scores. Most of these sources are not the most modern, and some don't even offer connection points, like DWH systems, which typically run at 90+% utilization.

This is where Wayang comes into play. With Wayang we can easily connect to each of the systems we need, and we automatically use already available data processing frameworks and engines like Spark, Kafka or Flink (and their commercial counterparts).

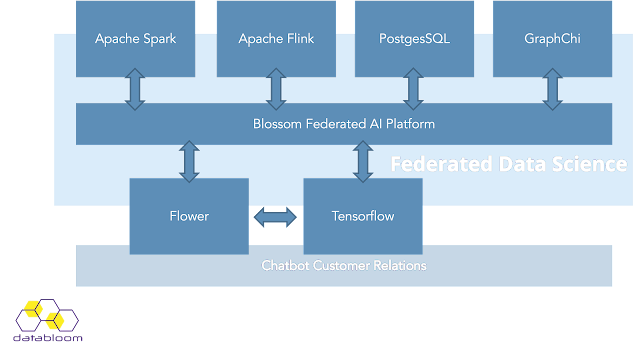

Now the fun part: we plug Flower into Wayang, and voilà, problem solved! The architecture could look like this:

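```python
import flwr as fl
import tensorflow as tf
import wayang  # illustrative Python binding: the Wayang calls below sketch the idea, they are not an existing API

# transactionFilter, customerFilter, convertToVector and FlowerImplementedClient
# are user-defined placeholders: two filter predicates, a row-to-vector mapper
# and a Flower client implementation.

context = wayang.context(env="federated")

# Read the transaction store and keep only the relevant transactions
transactions = (
    context.read("url to transaction")
    .filter(transactionFilter)
)

# Read the customer table, join it with the transactions and turn each
# joined row into a feature vector for training
input_flower = (
    context.read("url to customer table")
    .filter(customerFilter)
    .join(transactions)
    .map(convertToVector)
    .toNumpy()
)

# Hand the prepared data to Flower. Server and client are passed as callables
# so that the (hypothetical) runFlower can start them itself; calling
# start_server directly would block until training has finished.
context.runFlower(
    input_flower,
    server=lambda: fl.server.start_server(
        server_address="0.0.0.0:8080",
        config=fl.server.ServerConfig(num_rounds=3),
    ),
    client=lambda: fl.client.start_numpy_client(
        server_address="127.0.0.1:8080",  # clients connect to the server's address
        client=FlowerImplementedClient(),
    ),
    flowerEngine=tf,
)
```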
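The pipeline above leaves `transactionFilter`, `customerFilter` and `convertToVector` undefined. One hypothetical way to fill them in is shown below as plain Python over in-memory rows instead of Wayang operators. The schema (`customer_id`, `amount`, `country`, `credit_score`) and the feature choice are assumptions for illustration, and the join is implemented as a simple hash join.

```python
# Plain-Python stand-ins for the placeholders in the pipeline sketch.
# The record layout and the sample rows are hypothetical.

transactions = [
    {"customer_id": 1, "amount": 120.0},
    {"customer_id": 1, "amount": -5.0},  # e.g. a reversal that should be dropped
    {"customer_id": 2, "amount": 40.0},
]
customers = [
    {"customer_id": 1, "country": "DE", "credit_score": 710},
    {"customer_id": 2, "country": "US", "credit_score": 640},
    {"customer_id": 3, "country": "DE", "credit_score": 580},
]


def transactionFilter(t):
    # Keep only positive amounts
    return t["amount"] > 0


def customerFilter(c):
    # Keep only customers from the markets we train on
    return c["country"] in {"DE", "US"}


def join(left, right, key):
    """Hash join: index the right side by key, then probe it with each left row."""
    index = {}
    for right_row in right:
        index.setdefault(right_row[key], []).append(right_row)
    return [
        {**left_row, **right_row}
        for left_row in left
        for right_row in index.get(left_row[key], [])
    ]


def convertToVector(row):
    # Feature vector: [credit score scaled to 0..1, transaction amount]
    return [row["credit_score"] / 850, row["amount"]]


vectors = [
    convertToVector(row)
    for row in join(
        [c for c in customers if customerFilter(c)],
        [t for t in transactions if transactionFilter(t)],
        key="customer_id",
    )
]
print(vectors)  # a list like this is what toNumpy() would turn into an array
```

Customer 3 has no remaining transactions and therefore drops out of the join, so two training vectors remain.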

Flower takes care of the chatbot communication, the ML model and its execution with TensorFlow (TF) or any other supported ML framework, and delivers the outcome to Wayang. Wayang then enriches the model with information from deeper backend systems and streams the output back to Flower, which runs the next iteration with TF.

This architecture can serve as the backbone for extensive LLM systems that use the best tools available and access multiple data sources through Federated Learning. The stack is future-proof because both frameworks were built with pluggable extension support from the beginning, so new engines can be added as plugins when they appear. Even quantum computing AI training could be adopted as a plugin.

Conclusion

Building cutting-edge AI, machine learning and LLM stacks is no longer something only the biggest data companies in the world can handle. With this approach we guarantee data sustainability and unmatched data privacy, and enable digital transformation on a completely new level.

[1] https://cacm.acm.org/magazines/2020/12/248796-federated-learning-for-privacy-preserving-ai/fulltext

[2] https://wayang.apache.org/documentation.html

[3] https://github.com/adap/flower
